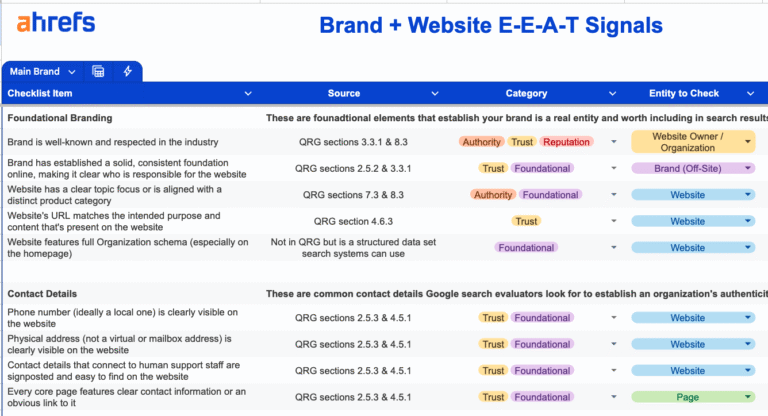

Architectural strain becomes visible when container density increases, latency margins shrink, and failures stop being theoretical. Teams encounter cascading restarts from kernel-level faults, inconsistent performance during traffic spikes, or audit findings that question how isolation is enforced. At this stage, the choice between containerization and virtualization on bare metal is no longer academic. It defines how risk propagates, how predictable systems remain under load, and how confidently platforms can grow.

Bare Metal as the Common Execution Layer

Bare metal is the physical server itself: CPU, memory, storage, networking, firmware, and the operating system that initializes them. Both virtual machines and containers ultimately execute on this same foundation.

The difference lies in how control and responsibility are distributed.

Virtualization introduces a hypervisor that mediates access to hardware. It partitions resources, enforces isolation, and defines failure domains. Containers operate at the operating system level, sharing a single kernel while isolating processes through namespaces and resource controls.

On bare metal, this distinction becomes more consequential because there is no upstream abstraction unless one is deliberately added. Every design choice directly impacts stability, security boundaries, and operational flexibility.

Isolation Models: Where Failures Are Contained

Isolation is defined by what still works when something goes wrong.

Virtual machines isolate workloads by design. Each VM has its own kernel and virtualized hardware context. If a guest operating system is compromised or crashes, the failure is typically contained within that VM. Crossing into another workload requires breaching the hypervisor, which remains one of the strongest isolation mechanisms in modern infrastructure.

Containers isolate workloads within a shared kernel. Namespaces separate processes and networking. Control groups limit resource usage. Capabilities restrict privileged operations. These mechanisms are efficient, but they do not create a hard security boundary.

On bare metal, container-only isolation introduces structural exposure:

- All workloads depend on the same kernel

- Kernel vulnerabilities affect every container

- Privileged or misconfigured containers increase host-level risk

- A kernel panic takes down all services simultaneously
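
The exposure listed above can be sketched as a small blast-radius model. This is only an illustration under stated assumptions: the `Workload` type, the workload names, and the kernel labels (`host`, `vm-a`, `vm-b`) are hypothetical, and the model covers guest kernel faults only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    kernel: str  # hypothetical label for the kernel instance the workload runs on


def blast_radius(workloads, failed_kernel):
    """Return the names of workloads that go down when `failed_kernel` panics."""
    return sorted(w.name for w in workloads if w.kernel == failed_kernel)


# Containers only: every workload shares the single host kernel.
containers_only = [
    Workload("api", "host"),
    Workload("db", "host"),
    Workload("batch", "host"),
]

# The same workloads grouped into VMs, each with its own guest kernel.
vm_partitioned = [
    Workload("api", "vm-a"),
    Workload("db", "vm-b"),
    Workload("batch", "vm-b"),
]

shared = blast_radius(containers_only, "host")      # all three workloads
partitioned = blast_radius(vm_partitioned, "vm-b")  # only the workloads in vm-b
```

In this model a panic in one guest kernel stops only the workloads inside that VM. A fault in the hypervisor or the physical host would still affect every workload, so the model describes a smaller failure domain, not an eliminated one.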

This is why containers alone are rarely sufficient for multi-tenant, regulated, or mixed-trust environments. Many production platforms reintroduce virtualization not for performance, but to define a clear blast radius.

Containerization vs Virtualization Performance on Bare Metal

Performance differences once justified avoiding virtualization. Today, that justification is increasingly narrow.

Modern hypervisors leverage hardware-assisted virtualization, optimized I/O paths, and direct device passthrough. For most enterprise workloads, the performance gap between virtual machines and bare metal is now minimal.

Commonly reported real-world results include:

- CPU and memory performance within a few percent of native

- High-throughput networking with SR-IOV and paravirtual drivers

- GPU workloads achieving near-native performance with passthrough or vGPU

Containers running directly on bare metal do remove one abstraction layer, but the benefit is workload-specific. For most web applications, APIs, databases, and microservices, the difference is operationally irrelevant compared to the gains in reliability and control offered by virtualization.

Bare metal containers still matter when:

- Latency tolerance is measured in microseconds

- Hardware access must be fully deterministic

- Edge environments cannot afford additional layers

Outside these cases, performance alone rarely drives the decision.

Resource Control and Predictability Under Load

Bare metal resources are finite. How they are enforced determines service quality.

Containers prioritize efficiency. CPU shares and memory limits are flexible, allowing higher density but increasing contention risk. Under pressure, workloads can starve each other, and kernel-level resource exhaustion can cascade across services.
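
To make the contention risk concrete, here is a minimal sketch of proportional-share CPU allocation. It is a simplified model in the spirit of weight-based CPU shares, not the kernel's actual scheduler. The function name, group names, weights, and core counts are all illustrative assumptions.

```python
def cpu_allocation(weights, demand, capacity):
    """Split `capacity` cores among groups in proportion to their weights.

    A group never receives more than it demands; cores it leaves unused are
    redistributed to the groups that still want more (simple water-filling).
    """
    alloc = {name: 0.0 for name in weights}
    active = {name for name in weights if demand[name] > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total_weight = sum(weights[n] for n in active)
        satisfied = set()
        distributed = 0.0
        for n in active:
            fair_share = remaining * weights[n] / total_weight
            give = min(fair_share, demand[n] - alloc[n])
            alloc[n] += give
            distributed += give
            if alloc[n] >= demand[n] - 1e-9:
                satisfied.add(n)
        remaining -= distributed
        if not satisfied:
            break  # every active group is capped by its share: contention
        active -= satisfied
    return alloc


weights = {"api": 1024, "batch": 1024}  # hypothetical equal weights

# Quiet period: both groups fit, so each gets exactly what it asks for.
quiet = cpu_allocation(weights, {"api": 2, "batch": 2}, capacity=8)

# Spike: combined demand exceeds the host, so "api" is squeezed below demand.
contended = cpu_allocation(weights, {"api": 6, "batch": 16}, capacity=8)
```

In the quiet case shares never bind and density looks free. In the contended case `api` asks for 6 cores and receives 4, which is the starvation scenario described above.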

Virtual machines enforce harder boundaries. Unless the host is deliberately overcommitted, allocated memory is reserved for the guest and each VM's virtual CPUs are scheduled independently, so one workload cannot consume another’s resources, even during spikes.
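
The difference between reserved and limit-based memory can be shown with simple arithmetic. The sketch below assumes a hypothetical 64 GiB host. The function names and sizes are illustrative, and hypervisors can also be configured to overcommit memory, in which case the reservation model no longer applies.

```python
def can_admit(host_gib, reserved_gib, request_gib):
    """Reservation model: admit a new VM only if it fits in unreserved memory."""
    return sum(reserved_gib) + request_gib <= host_gib


def overcommit_ratio(host_gib, limits_gib):
    """Limit model: summed container limits over physical memory (>1 means overcommitted)."""
    return sum(limits_gib) / host_gib


HOST_GIB = 64  # hypothetical host size

# Three 16 GiB VMs are reserved; a fourth fits, but a 32 GiB request is refused.
fits = can_admit(HOST_GIB, [16, 16, 16], 16)
refused = can_admit(HOST_GIB, [16, 16, 16], 32)

# Six containers with 16 GiB limits are all scheduled; the host is 1.5x overcommitted.
ratio = overcommit_ratio(HOST_GIB, [16] * 6)
```

Under reservation the shortfall appears at placement time as a refused request. Under limits it appears at runtime, when several workloads approach their limits at once.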

This distinction matters when:

- Internal SLAs must be met

- Workloads vary in behavior and priority

- Platform teams need predictable failure domains

On bare metal, predictability often outweighs maximal utilization.

Operational Reality at Scale

As platforms grow, operational risk eclipses theoretical efficiency.

Running containers directly on bare metal ties application availability to host maintenance. Kernel upgrades, driver changes, or firmware updates require careful coordination and downtime planning.

Virtualization decouples workloads from hardware lifecycle:

- Hosts can be patched or replaced without stopping services, typically by live-migrating VMs to other hosts first

- Kubernetes clusters can span multiple isolated nodes cleanly

- Upgrades and rollbacks become safer and more repeatable
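
The decoupling described above can be sketched as a capacity check for a rolling patch cycle: each host is emptied before it is patched, and the cycle fails early if the remaining hosts cannot absorb its VMs. This is a planning sketch only. It does not call any hypervisor API, and the host names, VM names, load units, and first-fit placement rule are assumptions.

```python
def drain(host, placement, vm_load, capacity):
    """Move every VM off `host` using first-fit; return the list of moves.

    Raises RuntimeError if the other hosts lack spare capacity.
    """
    moves = []
    for vm in list(placement[host]):
        for target in placement:
            if target == host:
                continue
            used = sum(vm_load[v] for v in placement[target])
            if used + vm_load[vm] <= capacity:
                placement[host].remove(vm)
                placement[target].append(vm)
                moves.append((vm, host, target))
                break
        else:
            raise RuntimeError(f"no capacity to evacuate {vm} from {host}")
    return moves


def rolling_patch(placement, vm_load, capacity):
    """Drain each host in turn (it is empty while patched); return the steps."""
    steps = []
    for host in list(placement):
        steps.append((host, drain(host, placement, vm_load, capacity)))
    return steps


# Hypothetical cluster: three hosts with 8 load units each, one kept empty as headroom.
placement = {"h1": ["vm1", "vm2"], "h2": ["vm3"], "h3": []}
vm_load = {"vm1": 4, "vm2": 4, "vm3": 4}

steps = rolling_patch(placement, vm_load, capacity=8)
```

With one host's worth of headroom the cycle completes and every VM stays placed throughout. Without that headroom `drain` raises before any host is taken offline, which is the point at which downtime planning would otherwise begin.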

This separation is why most large-scale Kubernetes platforms, including managed services, standardize on virtual machines even when bare metal is available.

Where Dataplugs Bare Metal Fits into Modern Architectures

Bare metal is most valuable when it enables architectural choice rather than enforcing one model.

Dataplugs bare metal servers provide a stable, isolated hardware foundation that supports both virtualization and containerization strategies. Teams can deploy hypervisors, Kubernetes clusters, or hybrid stacks based on workload requirements, not provider constraints.

This approach is particularly effective for:

- Private Kubernetes platforms requiring strong isolation

- High-performance workloads that still need governance

- Organizations transitioning from VM-based systems to containerized architectures

- Long-running services that demand consistent performance

By controlling the physical layer, platform teams retain flexibility at every higher layer without sacrificing predictability.

Conclusion

Containerization vs virtualization is fundamentally a decision about trust boundaries. On bare metal, those boundaries are either explicitly enforced or implicitly shared.

Containers deliver speed and efficiency by trusting the kernel. Virtual machines deliver isolation and predictability by inserting a deliberate control layer. Modern infrastructure strategies recognize that combining both often produces the most resilient outcome.

For organizations operating performance-sensitive, compliance-aware, or growth-oriented platforms, the goal is not choosing sides, but aligning architecture with real operational constraints. Dataplugs bare metal servers provide the foundation to make those decisions deliberately and sustainably.

For more information, Dataplugs can be contacted via live chat or email at sales@dataplugs.com.