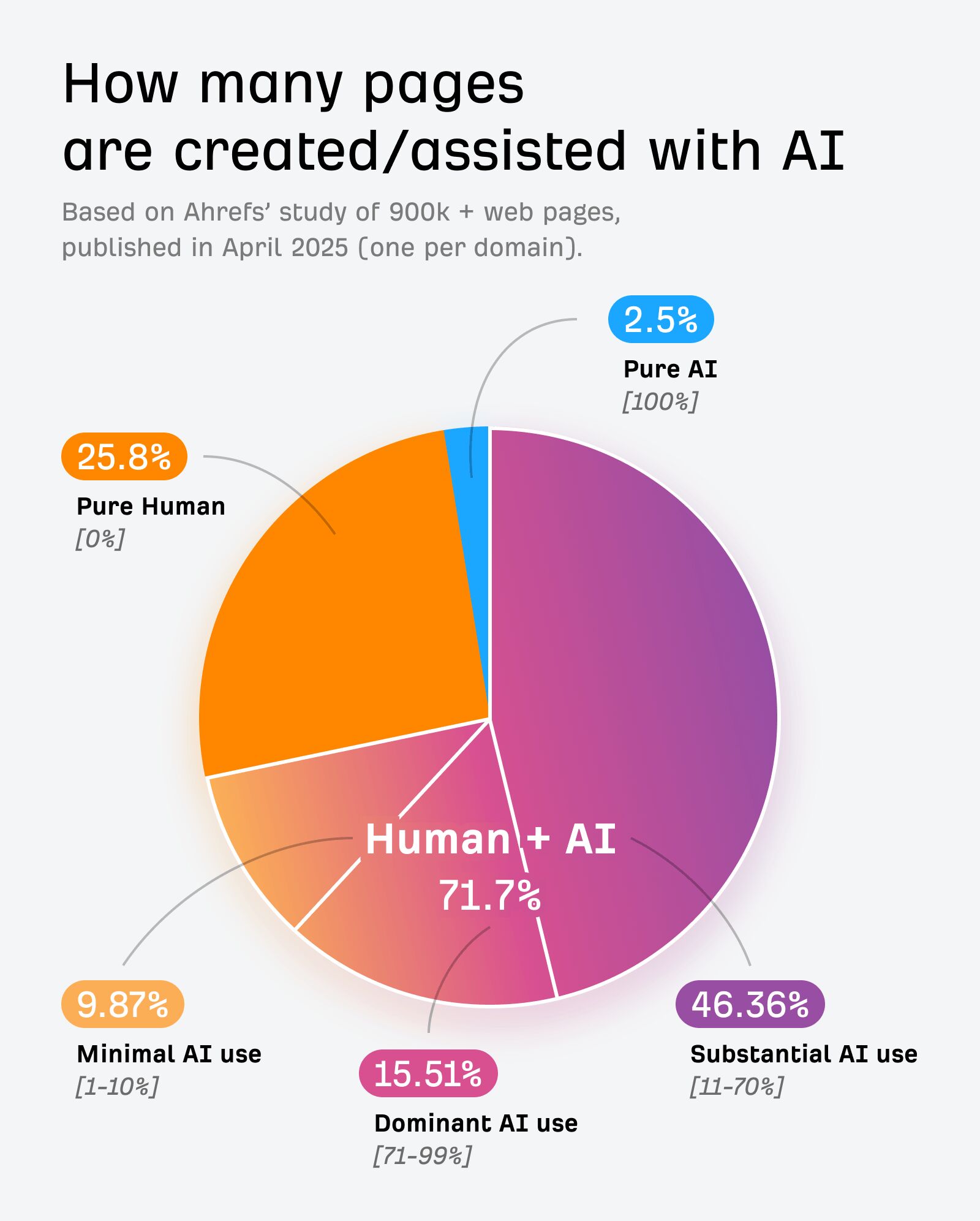

In April 2025, we analyzed 900,000 newly created web pages and discovered that 74.2% contained AI-generated content.

With the rapid growth of generative AI, businesses, educators, and publishers are asking a critical question: how can we tell what’s written by humans and what’s produced by machines?

The answer: it’s possible, but not foolproof. Here’s how to approach AI detection effectively, the limitations you need to understand, and a better way to get more reliable results.

Learn more in our study: 74% of New Webpages Include AI Content (Study of 900k Pages)

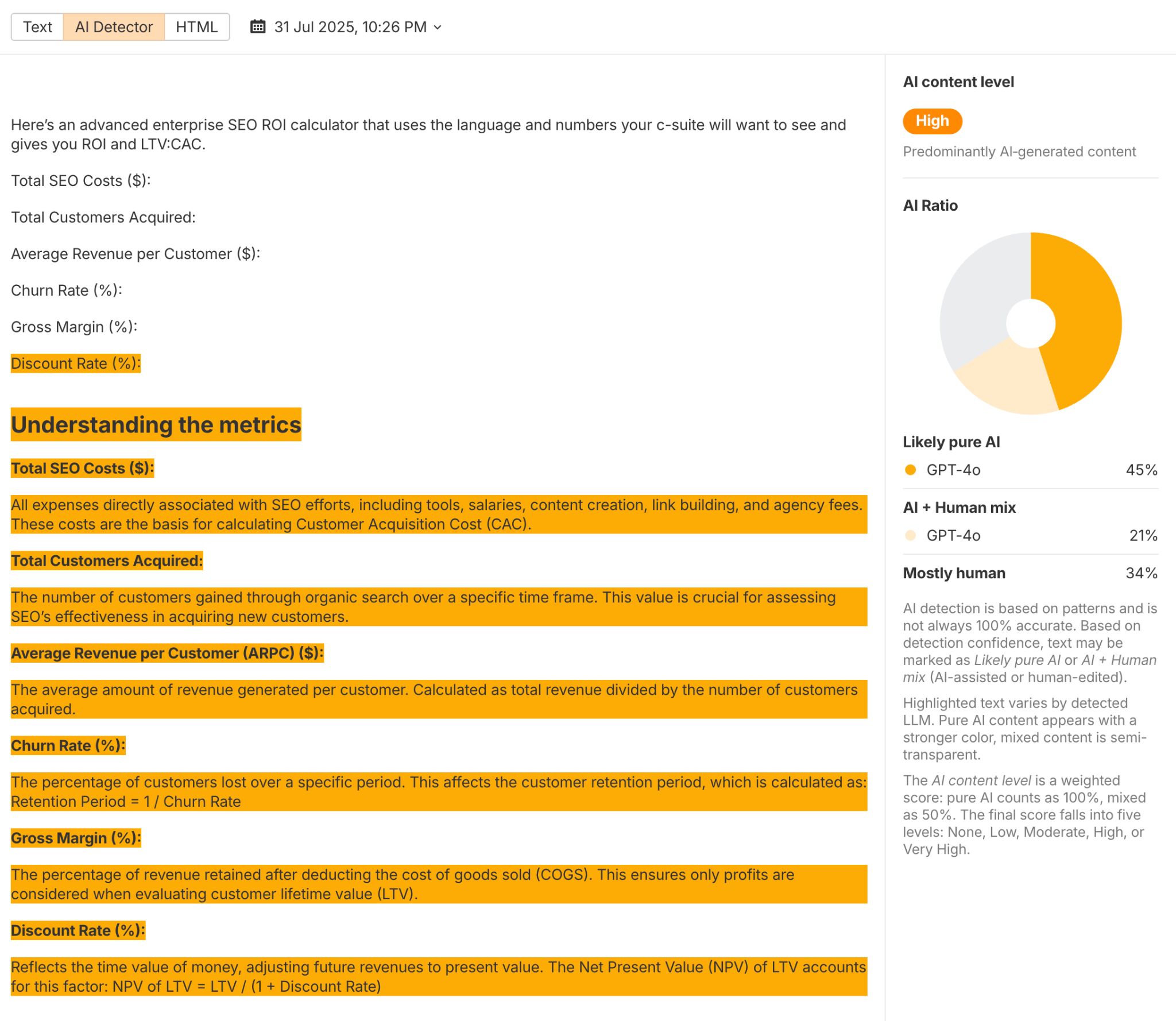

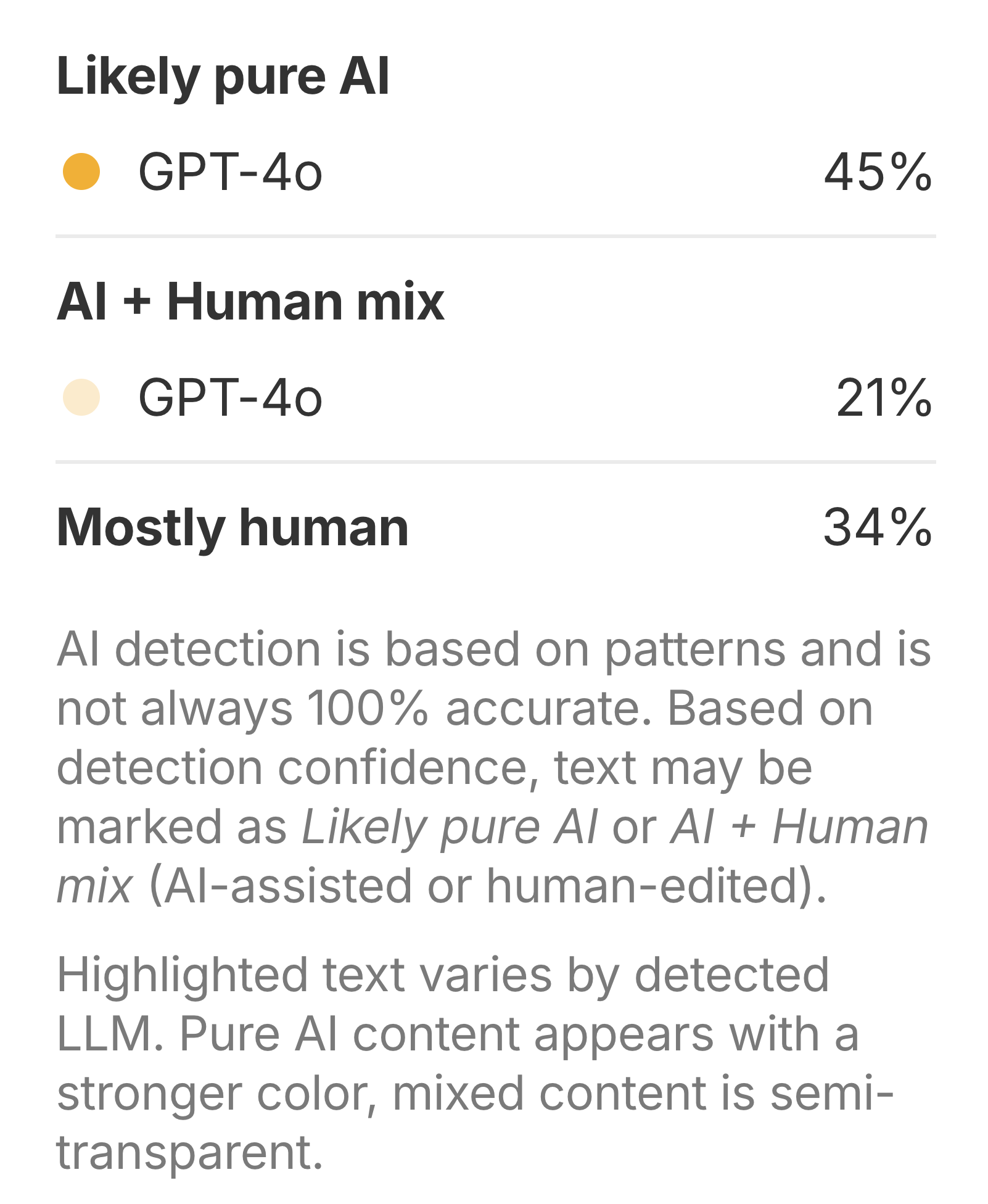

Good detectors don’t just give you a single yes-or-no verdict: they also break the text down and show you the likelihood that different passages are AI-generated, provide an overall article-level likelihood score, and in some cases even attempt to identify which models (such as GPT-4o) were likely used to create the content.

We ran a small-scale test comparing several of the most popular AI detectors to see how they perform in practice. Ahrefs’ AI detector and Copyleaks performed best, with GPTZero and Originality.ai close behind. At the other end of the scale, Grammarly and Writer performed the worst.

The table below shows the results:

| AI content detector | Score |
|---|---|
| Ahrefs | 13/18 |
| Copyleaks | 13/18 |
| GPTZero | 12/18 |
| Originality.ai | 12/18 |
| Scribbr | 10/18 |
| ZeroGPT | 9/18 |
| Grammarly | 6/18 |
| Writer | 4/18 |

Learn more in my full write-up: The 8 Best AI Detectors, Tested and Compared

Like LLMs, AI detectors are probabilistic—they estimate likelihood, not certainty. They can be highly accurate, but false positives are inevitable. That’s why you shouldn’t base decisions on a single result. Run multiple checks, look for patterns, and combine findings with other evidence.
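As a rough illustration of combining several checks instead of trusting one, the sketch below summarizes scores from multiple detectors. The detector names, the 0.0 to 1.0 score scale, the 0.8 threshold, and the two-detector agreement rule are all assumptions made for the example, not the behavior of any real tool.

```python
from statistics import mean


def combine_detector_scores(scores, flag_threshold=0.8, min_agreement=2):
    """Summarize AI-likelihood scores (0.0 to 1.0) from several detectors.

    `scores` maps a detector name to its score. The threshold and the
    agreement rule are illustrative assumptions, not recommended values.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    # Detectors whose score meets or exceeds the threshold.
    flagged = sorted(name for name, s in scores.items() if s >= flag_threshold)
    return {
        "mean_score": round(mean(scores.values()), 3),
        "flagged_by": flagged,
        # Escalate to a human reviewer only when enough detectors agree.
        "needs_review": len(flagged) >= min_agreement,
    }


# Hypothetical scores for one article from three unnamed detectors.
example = {"detector_a": 0.91, "detector_b": 0.85, "detector_c": 0.40}
print(combine_detector_scores(example))
```

Even when several detectors agree, the output is best treated as a prompt for human review rather than a verdict.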

All AI detectors share the same fundamental limitations, regardless of the tool or technology used.

- Heavily edited or “humanized” AI text may evade detection. “Post-processing” (things like rephrasing sentences, swapping in synonyms, rearranging paragraphs, or running the text through a grammar checker) can disrupt the statistical signals that detectors look for, reducing their accuracy.

- Basic detectors may lack accuracy and advanced features. Detection tools require frequent updates to stay ahead of new AI models—generative AI evolves quickly, and detectors need regular retraining to recognize the latest writing styles and avoidance techniques. At Ahrefs, our detector supports multiple leading models, including models from OpenAI, Anthropic, Meta, Mixtral, and Qwen, so you can check content against a broader range of likely sources.

- Effectiveness varies by language, content type, and model. Detectors trained primarily on English prose may struggle with technical writing, poetry, or less common languages.

- Ambiguous cases (like human text edited by AI) can blur results. These hybrid workflows create mixed signals that can confuse even advanced systems.

- Even the best tools can produce false positives or negatives. Statistical detection is never infallible. The patterns these systems rely on overlap between human and AI writing, and subtle edits or atypical writing styles can blur the distinction further, so occasional misclassifications are inevitable.

Remember: false accusations based on incorrect AI detection results can seriously damage the reputation of individuals, companies, or academic institutions.
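To see why a single flag is weak evidence, here is a small worked example using Bayes' rule. All three input rates are hypothetical numbers chosen for illustration; they are not measurements of any real detector.

```python
def probability_ai_given_flag(base_rate, true_positive_rate, false_positive_rate):
    """Probability that a flagged text really is AI-generated (Bayes' rule).

    base_rate: share of texts in the pool that are AI-generated.
    true_positive_rate: share of AI texts the detector flags.
    false_positive_rate: share of human texts the detector flags.
    """
    flagged_ai = base_rate * true_positive_rate
    flagged_human = (1 - base_rate) * false_positive_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("the detector never flags anything with these rates")
    return flagged_ai / total_flagged


# Hypothetical pool: 10% of texts are AI-written, and the detector
# catches 90% of them but also flags 5% of human-written texts.
p = probability_ai_given_flag(0.10, 0.90, 0.05)
print(f"{p:.1%} of flagged texts are actually AI-generated")
```

Under these assumed rates, about one in three flagged texts would be human-written, which is why a flag alone should not be grounds for an accusation.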

With these limitations in mind, it’s a good idea to corroborate any detector output with additional methods before drawing conclusions.

Human judgment can be extremely helpful for adding context to results from AI detectors. By examining context—such as patterns across multiple articles, a history of posts on social media, or the surrounding circumstances of publication—you can better gauge the likelihood that AI was involved in the writing.

Signs to look for:

- Overly consistent tone with no subtle quirks. Human writing is inherently a bit messy and unpredictable, with small variations in style, rhythm, and word choice that reflect personality and context. AI-generated text can sometimes lack these imperfections, producing a uniform tone that feels slightly too polished or mechanical.

- Verbosity. AI is very good at stretching simple ideas into long-winded explanations.

- Lack of new information. AI outputs often read as generic or surface-level (this is particularly obvious on LinkedIn: many AI-generated comments simply restate the original author’s idea in new words without adding any meaningful perspective or value).

- Tell-tale word choices. AI tends to favor slightly “off” idioms like “ever-evolving landscape” and formulaic hooks (“This isn’t X… it’s Y”), and to overuse em dashes and emojis.

- Incentives. Is there a clear motivation for the author to use AI content?


None of these signs offer definitive evidence for AI content, but they can add helpful context to other forms of evidence.

If you run an AI detector on just one article, an unreliable result can be problematic. That matters less when you look at results at scale: running the same check across many pages gives you a much clearer picture of how a company uses AI as part of its broader marketing strategy.
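A minimal sketch of what looking at results at scale could mean in practice: bucket per-page scores and report the share of pages in each bucket, so no single page drives the conclusion. The URLs, scores, and the 0.7 and 0.3 cutoffs are hypothetical, and the function is not part of any Ahrefs product or API.

```python
def summarize_site(page_scores, high=0.7, low=0.3):
    """Bucket per-page AI-likelihood scores (0.0 to 1.0) and return shares.

    `page_scores` maps a URL to a detector score. The `high` and `low`
    cutoffs are illustrative assumptions.
    """
    if not page_scores:
        raise ValueError("need at least one page score")
    buckets = {"likely_ai": 0, "mixed": 0, "likely_human": 0}
    for score in page_scores.values():
        if score >= high:
            buckets["likely_ai"] += 1
        elif score <= low:
            buckets["likely_human"] += 1
        else:
            buckets["mixed"] += 1
    total = len(page_scores)
    # Convert counts to shares of all pages checked.
    return {name: round(count / total, 2) for name, count in buckets.items()}


# Hypothetical scores for five pages on one site.
pages = {
    "/blog/a": 0.90,
    "/blog/b": 0.80,
    "/blog/c": 0.50,
    "/blog/d": 0.20,
    "/blog/e": 0.75,
}
print(summarize_site(pages))
```

A misclassified page shifts these shares only slightly, whereas the same error on a single-article check would flip the whole conclusion.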

With Ahrefs’ Top pages report in Site Explorer, you can see an “AI Content Level” column for the pages of almost any website. From there, you can inspect any individual URL and get an idea of the AI models that were likely used in the page’s creation.


Quick tip: use this report to spot top-ranking, heavily AI-generated content and consider creating your own AI version. If it’s ranking, it’s meeting search intent, which makes it a potential opportunity for you and your AI content workflow.
