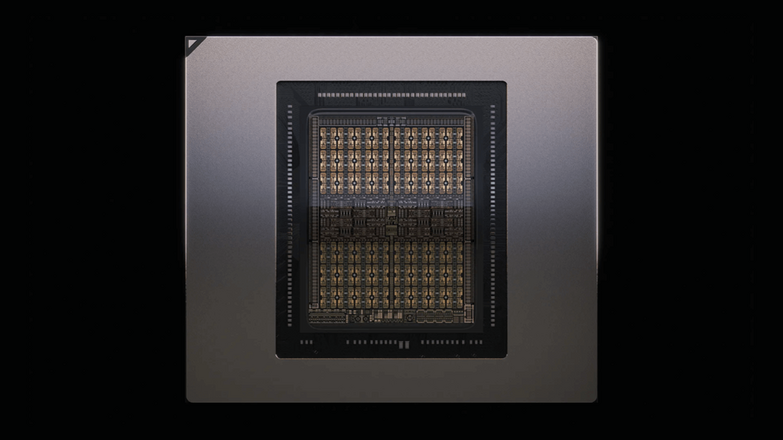

NVIDIA is preparing to expand the boundaries of artificial intelligence infrastructure with the introduction of the Rubin CPX, a new GPU designed specifically for handling exceptionally long context windows. The processor, part of the company’s upcoming Rubin series, is expected to become available by the end of 2026 and is tailored for workloads that demand processing power across sequences extending to more than one million tokens.

The Rubin CPX is built with a focus on disaggregated inference, an approach that splits AI inference into a compute-bound stage and a memory-bandwidth-bound stage. This separation allows specialized hardware to target each phase more effectively, addressing the new wave of challenges in AI applications.

The context phase, where large amounts of input data must be absorbed and analyzed to generate the first token output, is compute-intensive. The generation phase, in contrast, is constrained by memory bandwidth, requiring high-speed interconnects and rapid data transfers to sustain token-by-token performance. The CPX is intended to accelerate the context phase, delivering higher throughput and efficiency in scenarios where existing infrastructure is nearing its limits.
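The contrast between the two phases can be made concrete with a back-of-the-envelope arithmetic-intensity estimate. The sketch below is not from NVIDIA. It assumes a common rule of thumb (roughly 2 FLOPs per parameter per token for a dense model, with every weight read once per forward pass), ignores attention and key-value cache traffic, and uses hypothetical names plus an assumed 0.5 bytes per parameter for a 4-bit format such as NVFP4 (scale-factor overhead ignored).

```python
def arithmetic_intensity(tokens_per_pass: int, bytes_per_param: float) -> float:
    """Estimate FLOPs performed per byte of weights read in one forward pass.

    Assumption (rule of thumb, not an NVIDIA figure): a dense model spends
    about 2 FLOPs per parameter per token, and each pass reads every weight once.
    """
    flops_per_param = 2.0 * tokens_per_pass
    return flops_per_param / bytes_per_param


# Hypothetical workload: a 100,000-token prompt, 4-bit weights (0.5 bytes each).
BYTES_PER_PARAM = 0.5

# Context (prefill) phase: the whole prompt is processed in one pass.
prefill_intensity = arithmetic_intensity(100_000, BYTES_PER_PARAM)

# Generation (decode) phase: one new token per pass for a single sequence.
decode_intensity = arithmetic_intensity(1, BYTES_PER_PARAM)

print(f"prefill: {prefill_intensity:,.0f} FLOPs per weight byte")
print(f"decode:  {decode_intensity:,.0f} FLOPs per weight byte")
```

Under these assumptions the context phase performs 400,000 FLOPs per weight byte against 4 for single-sequence generation, which is why the first is limited by compute and the second by how fast weights can be streamed from memory. Batching many sequences raises the decode figure, but the gap remains large for long prompts.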

These long-context requirements are increasingly common in advanced use cases such as software development, research, and film production. For instance, AI systems designed to act as collaborators in programming need to reason across entire codebases, maintaining coherence across files and functions. Similarly, long-form video projects or scientific research require persistent memory and logical continuity across vast sequences of data. NVIDIA’s Rubin CPX has been engineered to meet these demands, with 128 GB of GDDR7 memory, hardware-accelerated video processing, 30 petaFLOPs of NVFP4 compute capacity, and attention acceleration three times that of the NVIDIA GB300 NVL72.

Inference Infrastructure

NVIDIA’s launch of the Rubin CPX reflects broader changes in how inference infrastructure is conceived. The company’s SMART methodology – focused on scalability, return on investment, multidimensional performance, and architectural efficiency – emphasizes full-stack integration. The CPX complements platforms such as Blackwell and the GB200 NVL72 system and integrates with open-source frameworks like TensorRT-LLM and Dynamo. NVIDIA Dynamo plays a crucial role in orchestrating disaggregated inference, ensuring efficient routing, memory management, and low-latency key-value cache transfers, which were instrumental in the company’s recent performance benchmarks.

The Rubin CPX does not stand alone. It is designed to work alongside other components in NVIDIA’s ecosystem, including Vera CPUs and Rubin GPUs, forming part of larger rack-level deployments. One such configuration, the Vera Rubin NVL144 CPX rack, integrates 144 Rubin CPX GPUs, 144 Rubin GPUs, and 36 Vera CPUs. This setup offers up to 8 exaFLOPs of NVFP4 compute power, 100 terabytes of high-speed memory, and 1.7 petabytes per second of memory bandwidth, with the compute figure more than seven times that of the GB300 NVL72. Connectivity comes from NVIDIA Quantum-X800 InfiniBand or Spectrum-X Ethernet paired with SuperNICs, while Dynamo handles orchestration.
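As a rough cross-check, the per-GPU figures quoted earlier can be scaled up to the rack. The sketch below uses only numbers from this article and assumes, hypothetically, that the rack totals are simple sums of per-device peak figures. NVIDIA has not published this breakdown here, so the shares are illustrative only.

```python
# Figures quoted in the article.
CPX_PER_RACK = 144       # Rubin CPX GPUs in a Vera Rubin NVL144 CPX rack
CPX_PFLOPS = 30          # NVFP4 petaFLOPs per Rubin CPX
CPX_GDDR7_GB = 128       # GDDR7 capacity per Rubin CPX
RACK_EXAFLOPS = 8        # "up to" NVFP4 exaFLOPs per rack
RACK_MEMORY_TB = 100     # high-speed memory per rack

# Assumption: rack totals are plain sums of per-device peaks (decimal units).
cpx_exaflops = CPX_PER_RACK * CPX_PFLOPS / 1000
cpx_memory_tb = CPX_PER_RACK * CPX_GDDR7_GB / 1000

compute_share = cpx_exaflops / RACK_EXAFLOPS
memory_share = cpx_memory_tb / RACK_MEMORY_TB

print(f"CPX compute: {cpx_exaflops:.2f} EF ({compute_share:.0%} of the rack total)")
print(f"CPX memory:  {cpx_memory_tb:.1f} TB ({memory_share:.0%} of the rack total)")
```

On this reading, the 144 CPX GPUs would account for about 4.32 of the 8 exaFLOPs and about 18.4 of the 100 terabytes, with the remainder coming from the Rubin GPUs and Vera CPUs.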

For enterprises, the implications are economic as much as technical. The Rubin CPX and its rack-level implementations are positioned as redefining inference economics, with projections of 30–50 times return on investment at scale. NVIDIA estimates that a $100 million capital expenditure could translate into as much as $5 billion in revenue, highlighting the potential impact for organizations betting on next-generation generative AI.
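The two figures line up if the "return" is read as revenue divided by capital expenditure, which is an interpretation rather than something the announcement spells out. A minimal sketch of that arithmetic, with hypothetical names:

```python
def revenue_multiple(revenue_usd: float, capex_usd: float) -> float:
    """Revenue generated per dollar of capital expenditure."""
    return revenue_usd / capex_usd


CAPEX_USD = 100e6            # $100 million, from the article
UPPER_REVENUE_USD = 5e9      # $5 billion, from the article

upper_multiple = revenue_multiple(UPPER_REVENUE_USD, CAPEX_USD)

# Derived, not stated by NVIDIA: revenue implied by the low end of the 30-50x range.
implied_low_revenue_usd = 30 * CAPEX_USD

print(f"upper multiple: {upper_multiple:.0f}x")
print(f"revenue implied at 30x: ${implied_low_revenue_usd / 1e9:.0f} billion")
```

The $5 billion figure corresponds to the top of the range at 50 times, while the 30-times end would imply roughly $3 billion on the same outlay. Operating costs such as power and staffing are not part of this calculation.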

The introduction of the Rubin CPX underscores NVIDIA’s continued dominance in the AI infrastructure market. With data center sales reaching $41.1 billion in its most recent quarter, the company’s aggressive development cycle shows no signs of slowing. By targeting long-context inference – a rapidly growing bottleneck for many AI systems – NVIDIA is positioning the Rubin series as the backbone of applications that demand more than incremental improvements in performance.

As AI workloads become more complex, involving multi-step reasoning, persistent memory, and agentic systems with long-horizon tasks, infrastructure must adapt. The Rubin CPX and its associated platforms represent NVIDIA’s answer to this new phase of AI development, blending raw compute with orchestration, memory management, and high-performance networking to deliver scalable, efficient, and economically viable solutions for enterprises and developers worldwide.