“Chunk optimization” is the latest shiny object in SEO, but it’s not the shortcut to visibility in AI search that many think it is.

Across LinkedIn threads and AI‑SEO guides, the promise is the same: format your content into perfect “chunks” and you’ll get chosen for Google’s AI Overviews or cited in AI search results.

The problem?

Chunk optimization isn’t actually an SEO tactic. It’s a technical term borrowed from AI engineering—misunderstood, misapplied, and mostly out of your hands.

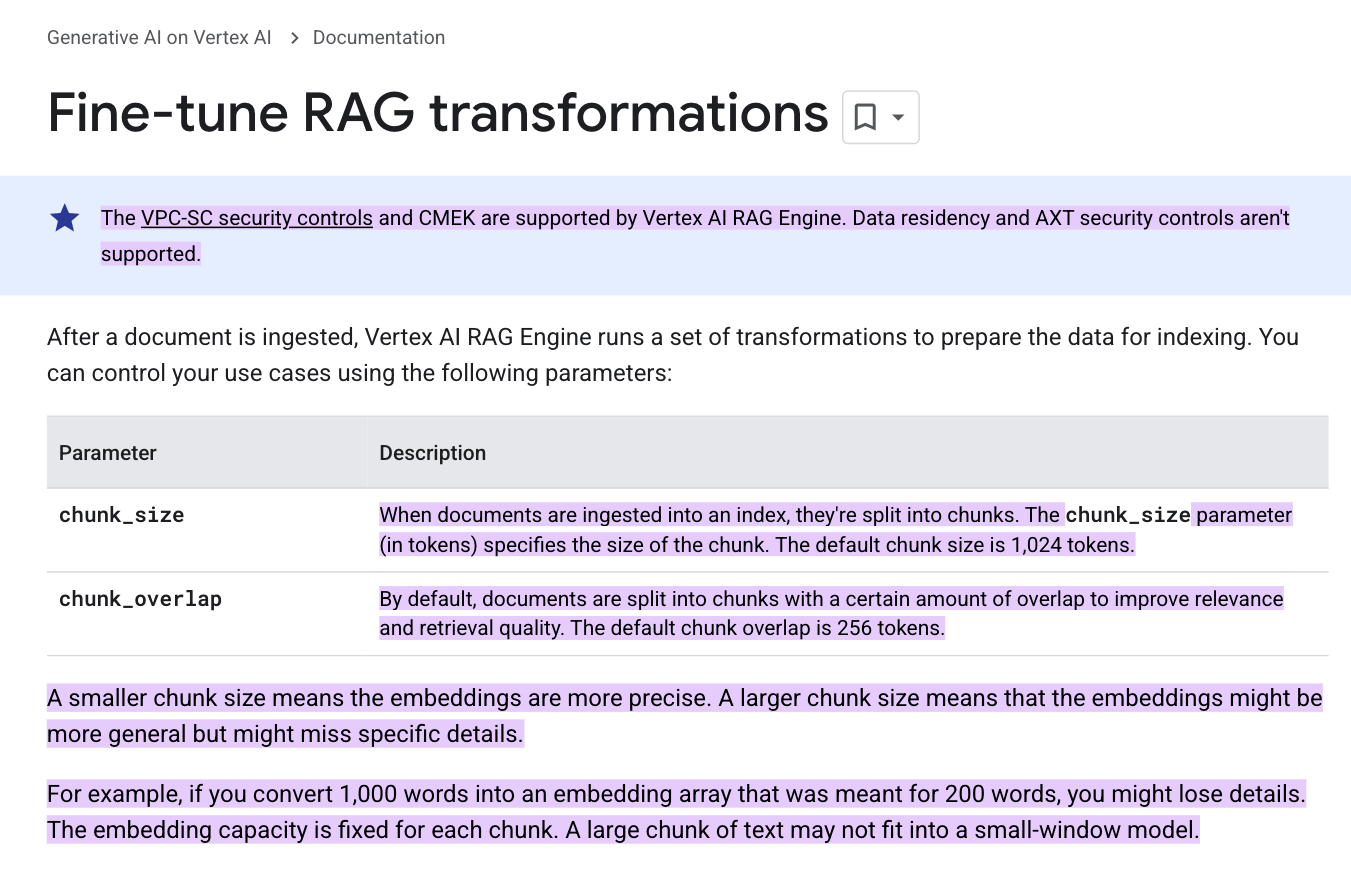

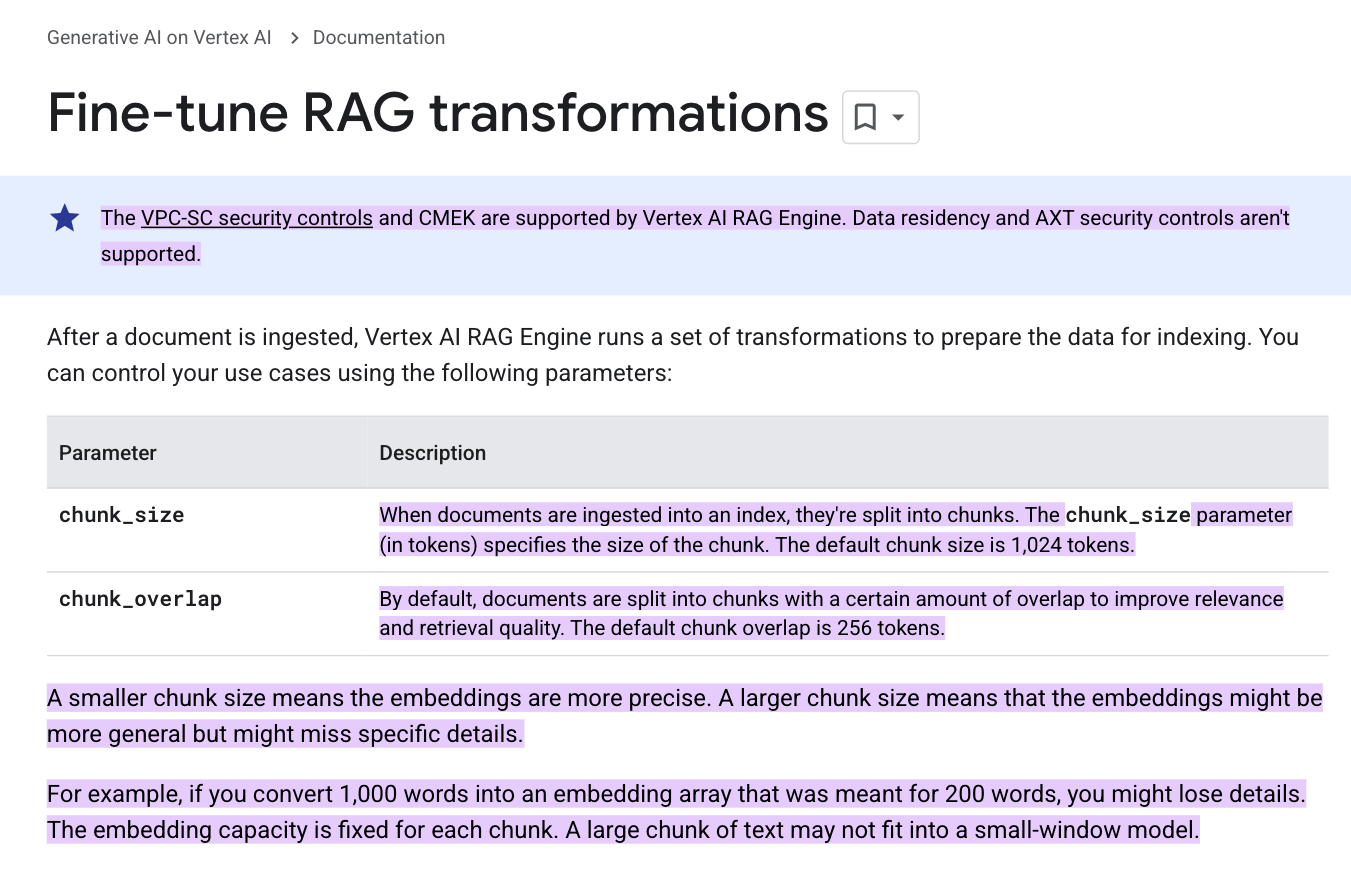

Vertex AI’s preferences:

Over time, each model becomes hyper‑personalized to its own performance needs.

AI engineers are incentivized to find even small efficiency gains because every fraction of a cent saved on compute can scale to millions of dollars at the production level.

They routinely test how different chunk sizes, overlaps, and strategies impact retrieval quality, latency, and cost, and make changes whenever the math favors the bottom line.
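To put rough numbers on that incentive, here's the arithmetic as a quick Python sketch. The per-query saving and the daily query volume are hypothetical figures chosen for illustration, not real pricing or traffic data from any AI provider.

```python
# Hypothetical inputs for illustration only: not real provider pricing or traffic.
SAVING_PER_QUERY_USD = 0.0001   # assume a chunking tweak saves 1/100 of a cent per query
QUERIES_PER_DAY = 50_000_000    # assumed daily query volume for a large AI search product


def annual_savings(saving_per_query: float, queries_per_day: int, days: int = 365) -> float:
    """Scale a tiny per-query saving up to a yearly total."""
    return saving_per_query * queries_per_day * days


total = annual_savings(SAVING_PER_QUERY_USD, QUERIES_PER_DAY)
print(f"Estimated yearly saving: ${total:,.0f}")  # prints: Estimated yearly saving: $1,825,000
```

Swap in your own assumptions; the point is only that fractions of a cent multiply quickly at production scale.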

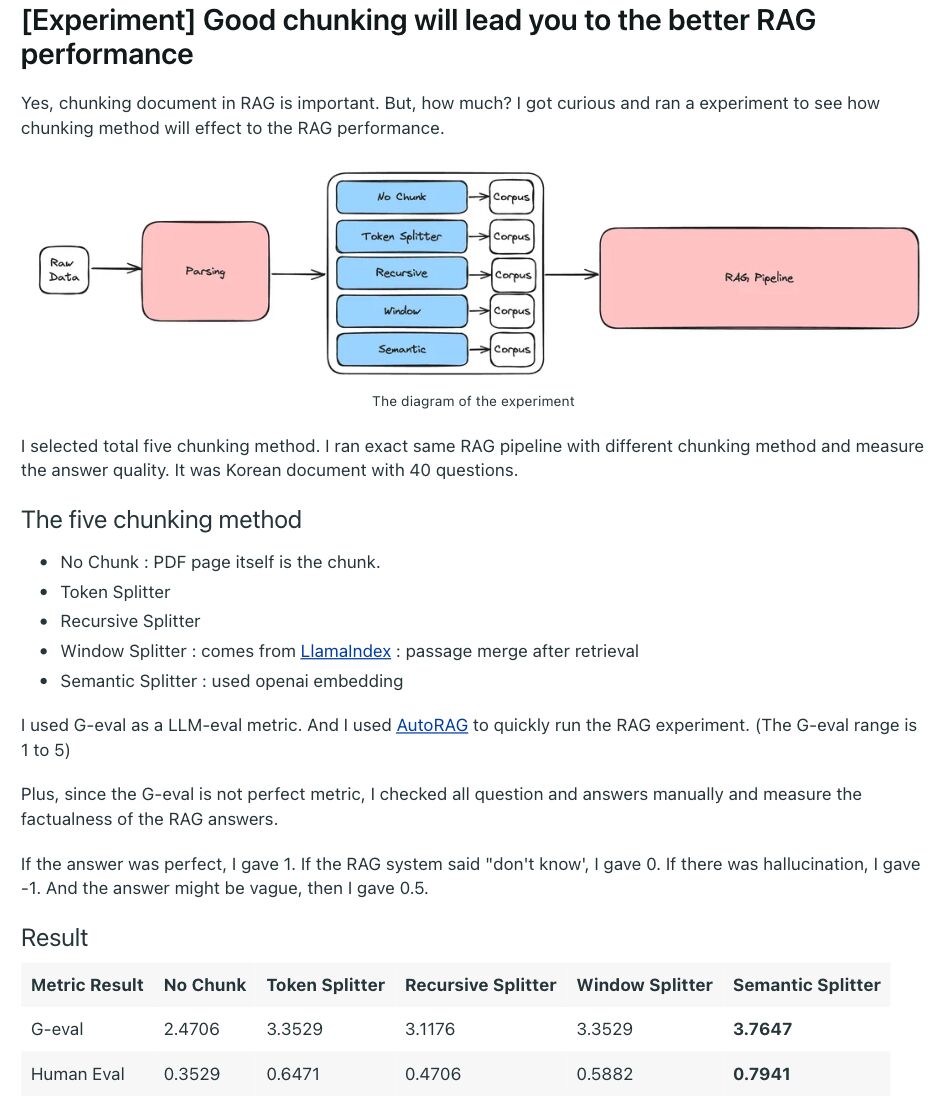

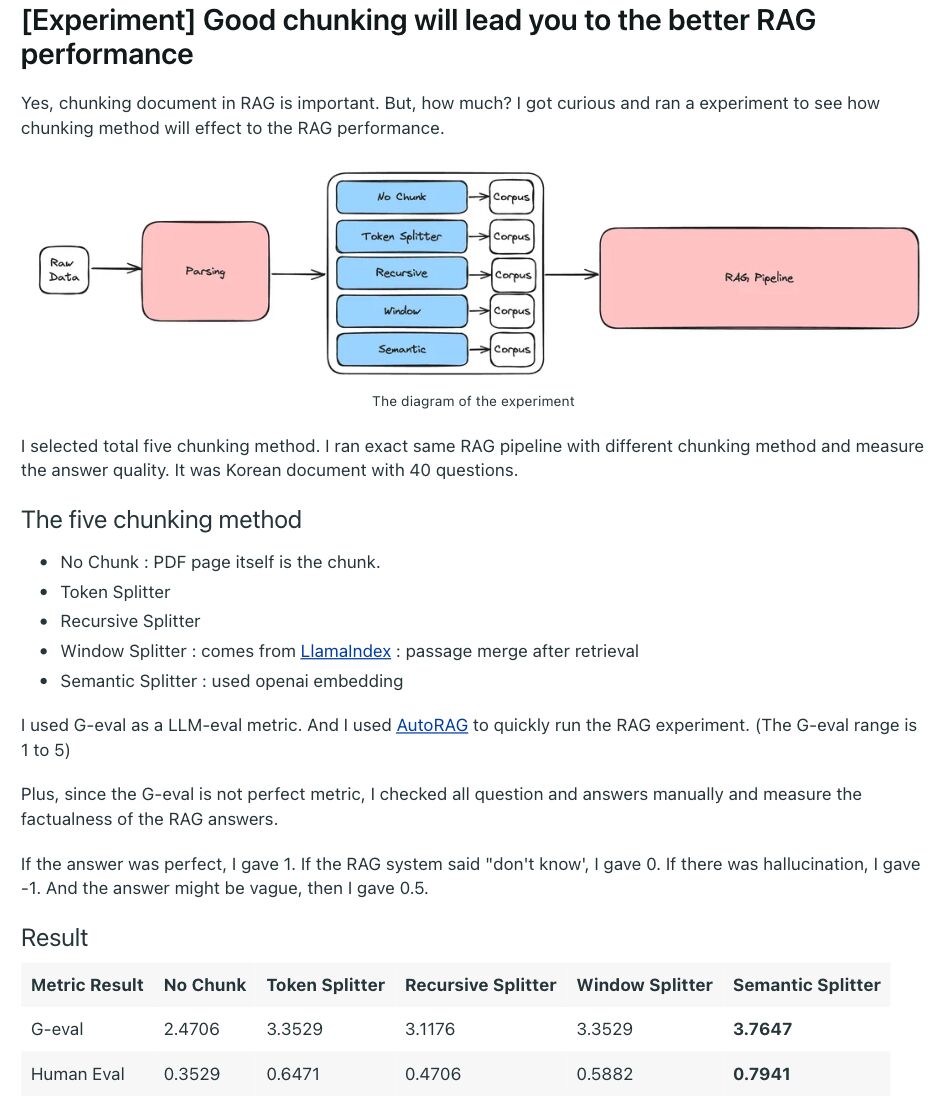

For example, here’s what such RAG performance experiments look like:

Engineers have run dozens of similar experiments, each testing which chunking methods work best for different document types and models.

This means any manual “optimization” you do today can be rendered irrelevant by a model update tomorrow.
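Here's a minimal sketch of the kind of parameter sweep those experiments run, assuming the simplest strategy: fixed-size windows with overlap. Whitespace-separated words stand in for real model tokens, and the `chunk_tokens` helper and the sizes tried are hypothetical, not any vendor's production settings. Changing a single parameter shifts where chunks begin on the exact same page.

```python
def chunk_tokens(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    """Split tokens into fixed-size windows; consecutive windows share `overlap` tokens."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("expected size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):  # this window already reached the end
            break
    return chunks


page = (
    "Xeriscaping is a landscaping approach that reduces the need for irrigation. "
    "It relies on drought-tolerant plants, efficient watering, and mulch. "
    "Costs vary with yard size, plant choices, and how much turf you remove."
)
tokens = page.split()  # crude stand-in for a real subword tokenizer

# Same page, three hypothetical configurations: each one starts chunks at different words.
for size, overlap in [(8, 0), (8, 2), (12, 4)]:
    chunks = chunk_tokens(tokens, size, overlap)
    print(f"size={size} overlap={overlap}: {len(chunks)} chunks, starting with",
          [chunk[0] for chunk in chunks])
```

Nothing about the writing changed between runs; only the pipeline's settings did.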

3. Manual chunk optimization is a dead end

Ultimately, manual “chunk optimization” is impossible in practice.

Even if you try to write perfect chunks, you have no control over where a model will cut your content.

Many chunking pipelines split text by token count rather than by paragraph or sentence, and each retrieval system chooses its own split points. That means the intro sentence you carefully wrote for a chunk might:

- Land in the middle of a chunk instead of at the start

- Get split in half across two chunks

- Be ignored entirely if the model’s retrieval slices around it
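To see how an intro sentence can end up split, here's a small sketch that reports which fixed-size windows contain part of a given sentence. It assumes non-overlapping windows by default and uses whitespace words as tokens; `locate_sentence` is a hypothetical helper for illustration, not part of any retrieval library.

```python
def locate_sentence(text: str, sentence: str, size: int, overlap: int = 0) -> list[tuple[int, int]]:
    """Return (chunk_index, words_of_sentence_in_that_chunk) for every fixed-size
    window that holds part of `sentence`. Words stand in for tokens."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("expected size > 0 and 0 <= overlap < size")
    tokens, target = text.split(), sentence.split()
    # Find where the sentence starts in the token stream.
    lo = next((i for i in range(len(tokens) - len(target) + 1)
               if tokens[i:i + len(target)] == target), None)
    if lo is None:
        raise ValueError("sentence not found in text")
    hi = lo + len(target)

    hits = []
    step = size - overlap
    for index, start in enumerate(range(0, len(tokens), step)):
        end = min(start + size, len(tokens))
        shared = min(end, hi) - max(start, lo)  # words of the sentence inside this window
        if shared > 0:
            hits.append((index, shared))
        if start + size >= len(tokens):
            break
    return hits


section = ("Plenty of gardeners ask about it. Xeriscaping is landscaping that "
           "needs little or no supplemental watering. Here is why it matters.")
intro = "Xeriscaping is landscaping that needs little or no supplemental watering."
print(locate_sentence(section, intro, size=8))   # prints [(0, 2), (1, 8)]: split across two chunks
print(locate_sentence(section, intro, size=16))  # prints [(0, 10)]: whole sentence in one chunk
```

The same sentence is intact under one window size and cut in two under another, which is exactly the part you don't get to choose.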

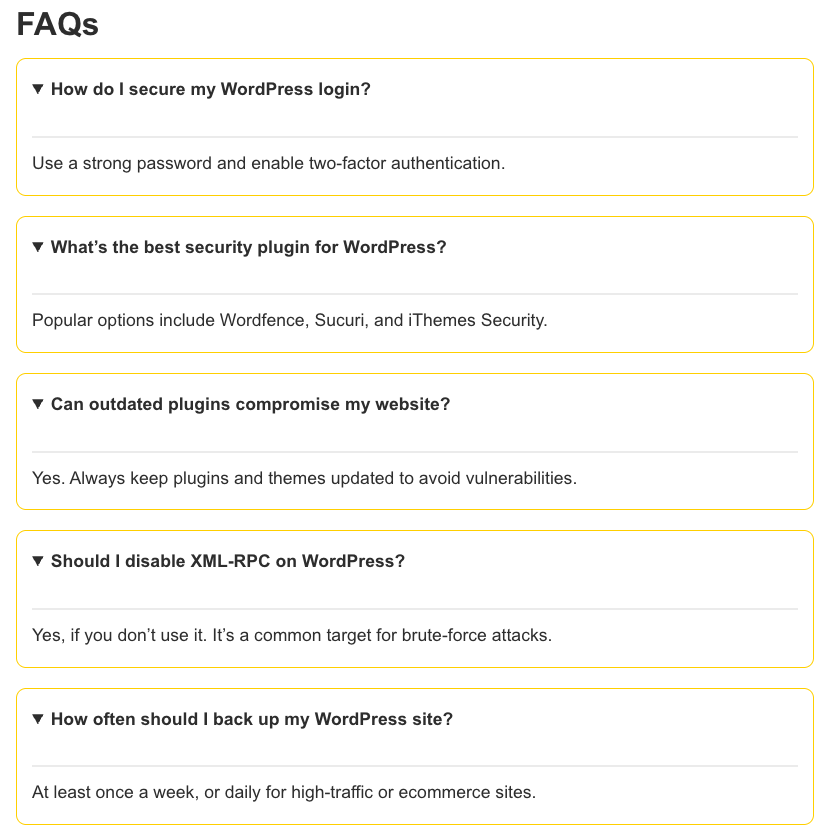

On top of that, Google has already shown what happens to sites that force formatting to game visibility. For example, FAQ‑style content farms once flooded search results with neatly packaged answers to win snippets and visibility.

In recent updates, Google demoted or penalized these sites after recognizing the pattern as manipulative, low-value AI-generated slop.

Trying to pre‑structure content for hypothetical AI chunking is the same trap.

You can’t predict how an AI system will split your page into chunks today, and any attempt to force a particular split could backfire or become obsolete after the next model update.

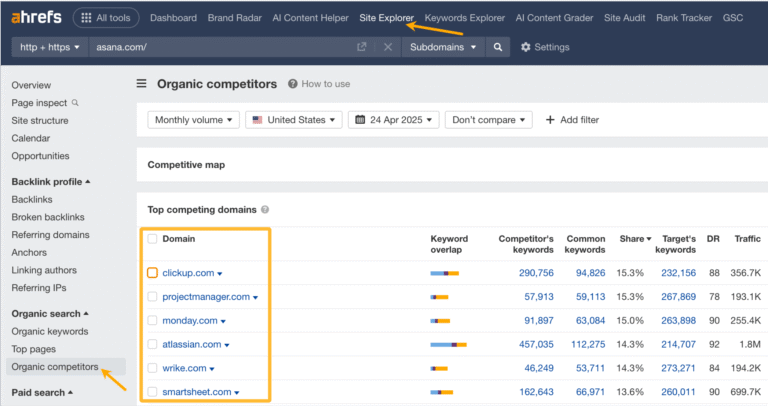

Step 1: Keyword and topic research

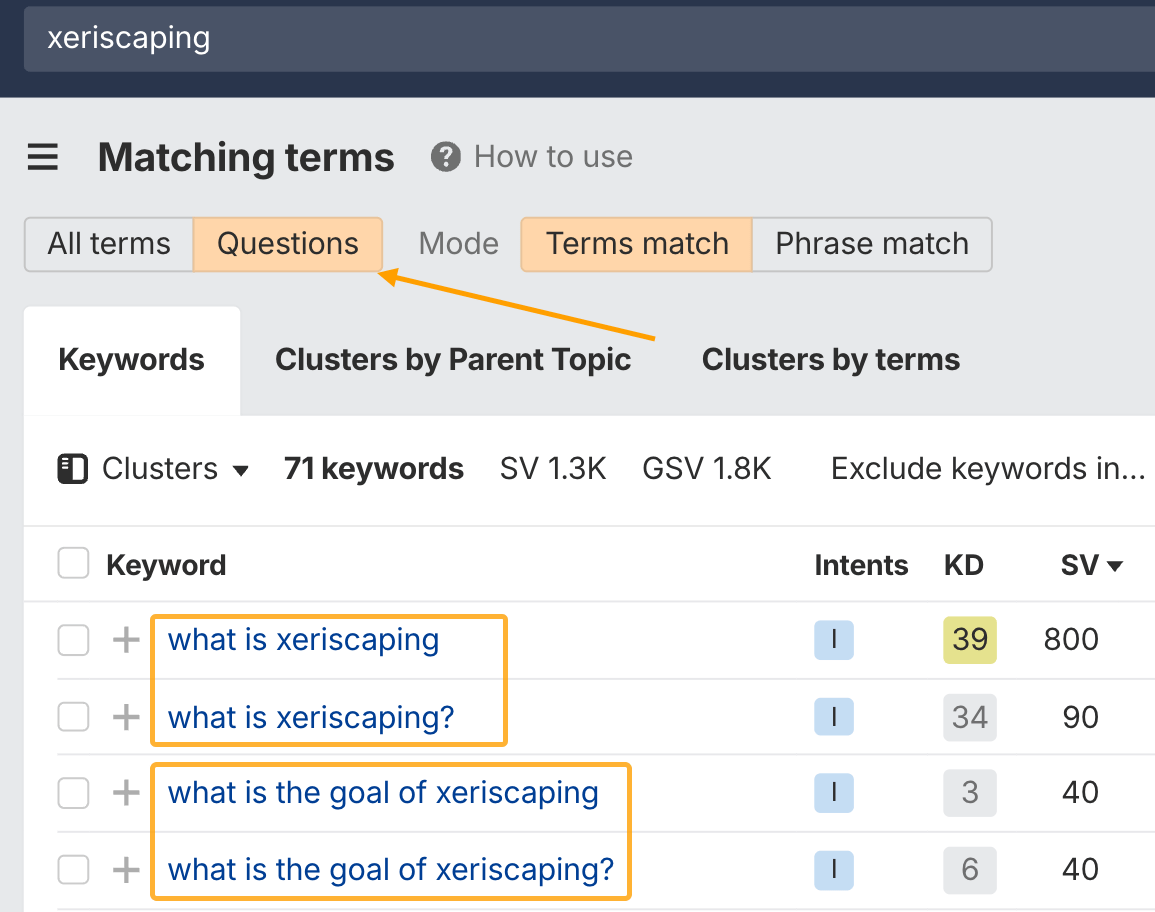

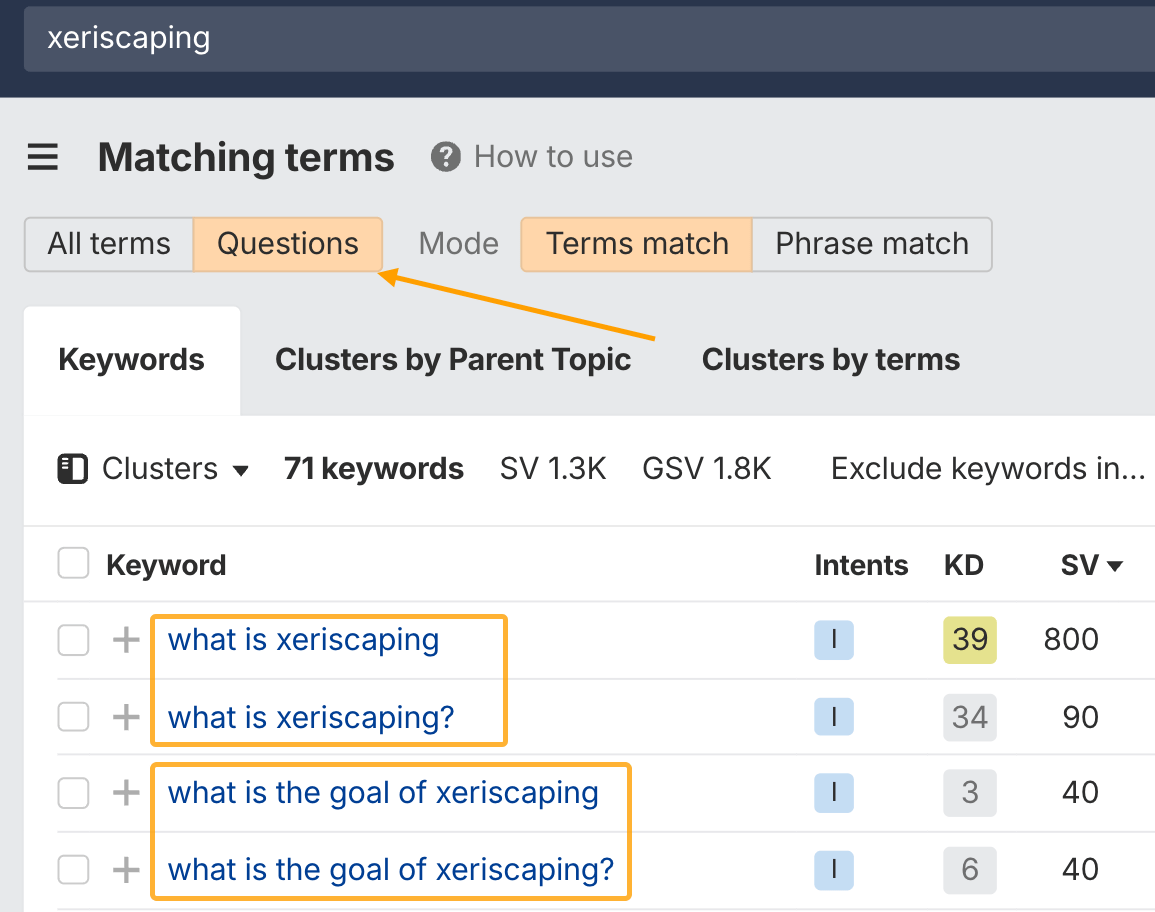

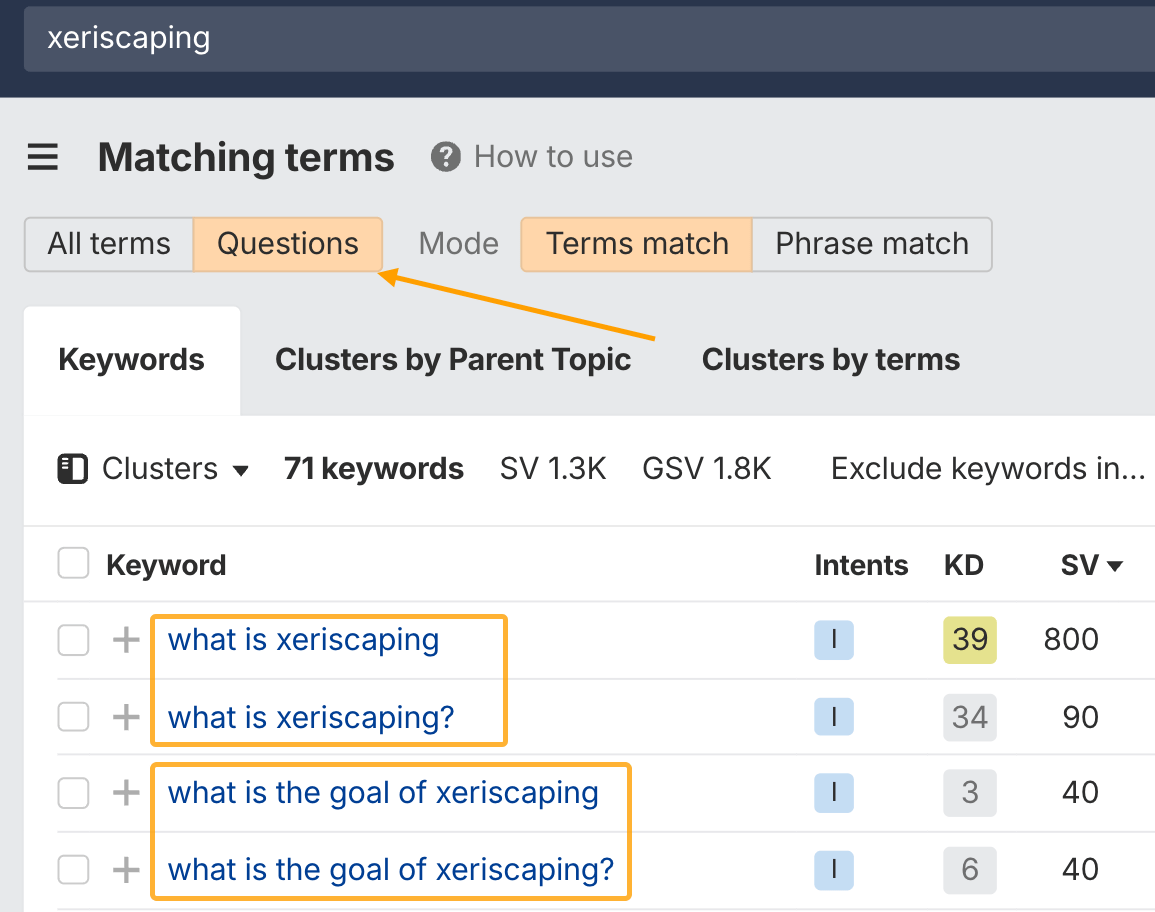

Using Ahrefs’ Keywords Explorer, you can find which questions or topics deserve their own sections within a page you’re writing.

For example, on the topic of xeriscaping (a type of gardening), you can answer questions like:

- What is xeriscaping?

- What’s the goal of xeriscaping?

- How much does xeriscaping cost?

- What plants are good for xeriscaping?

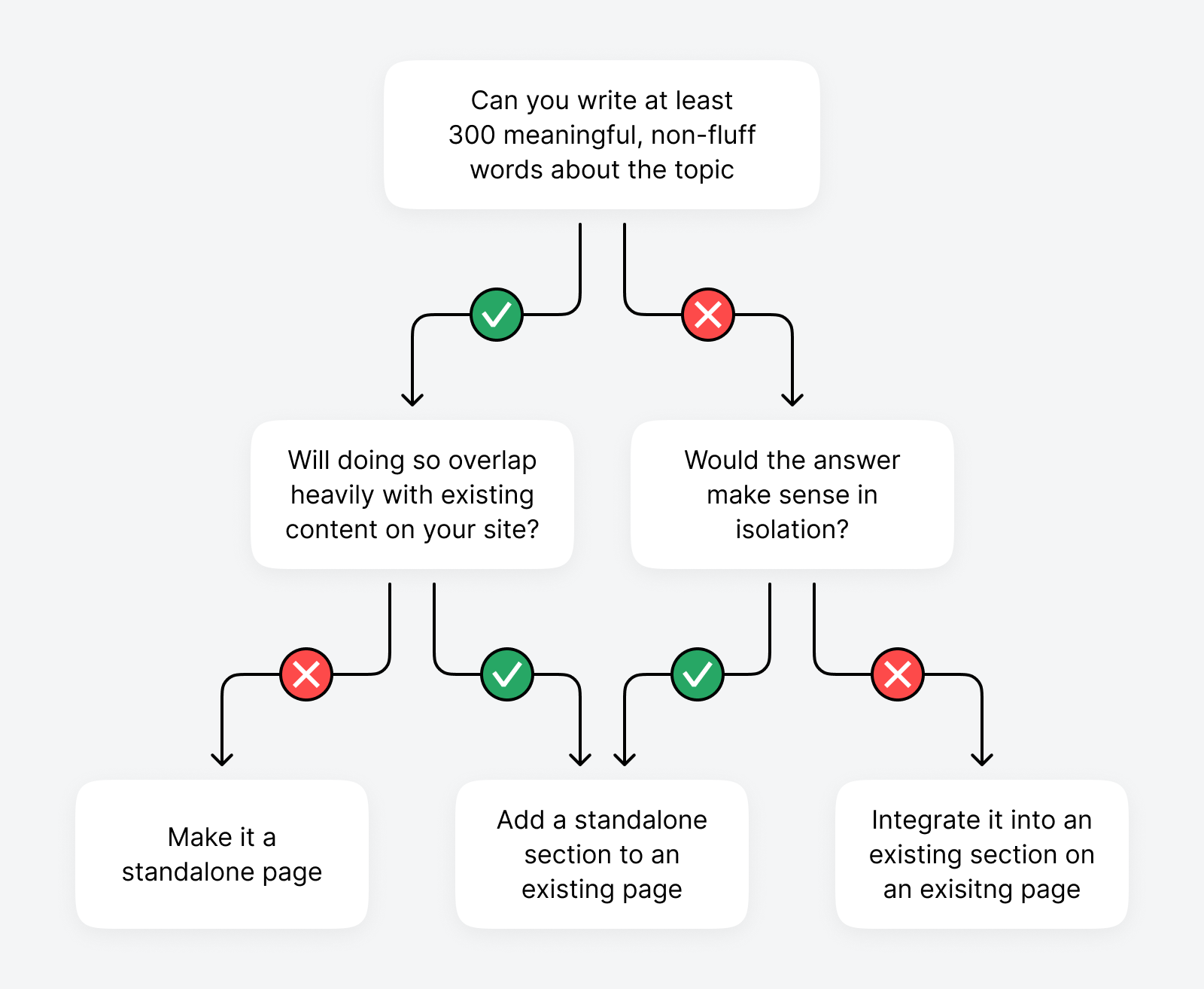

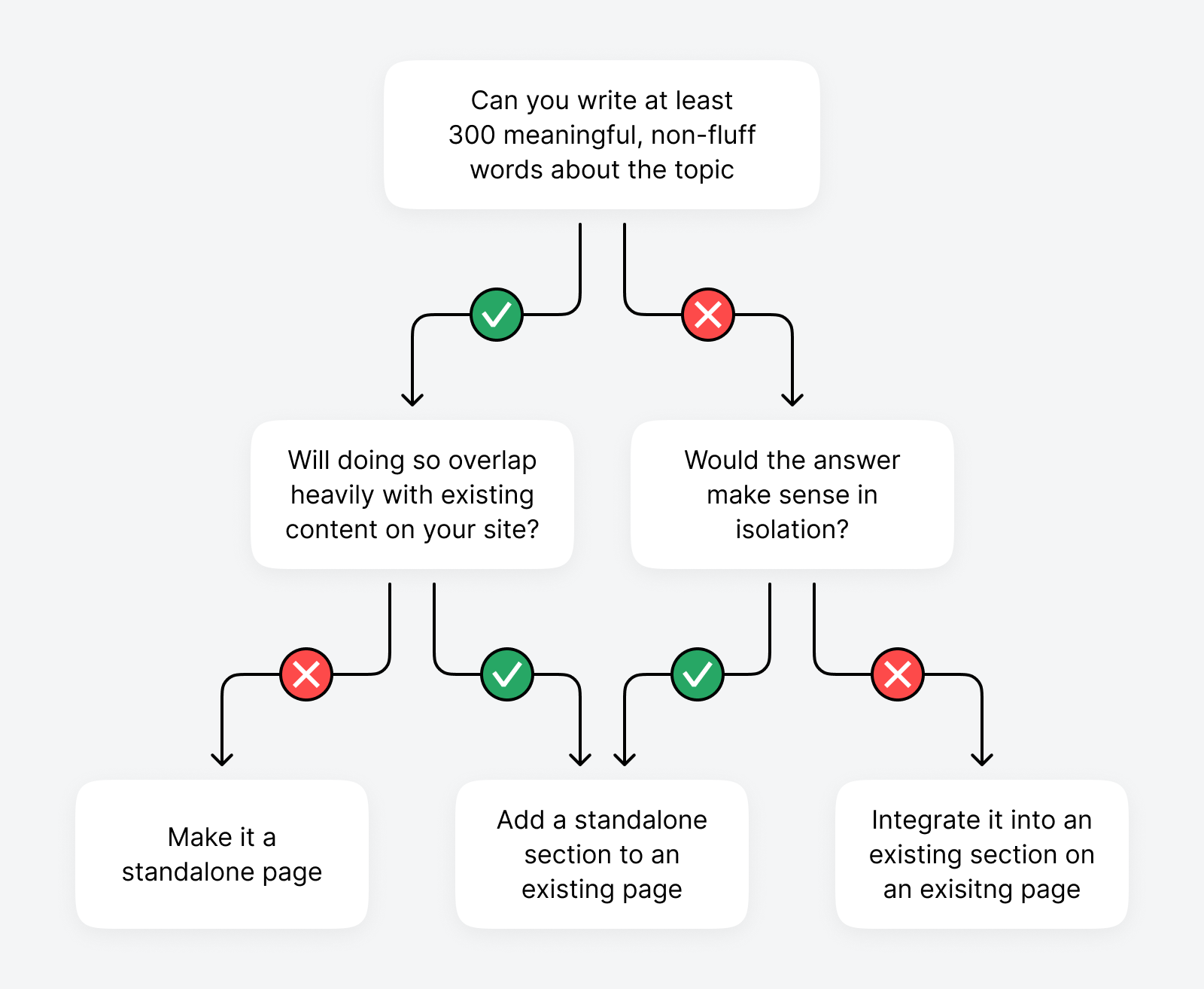

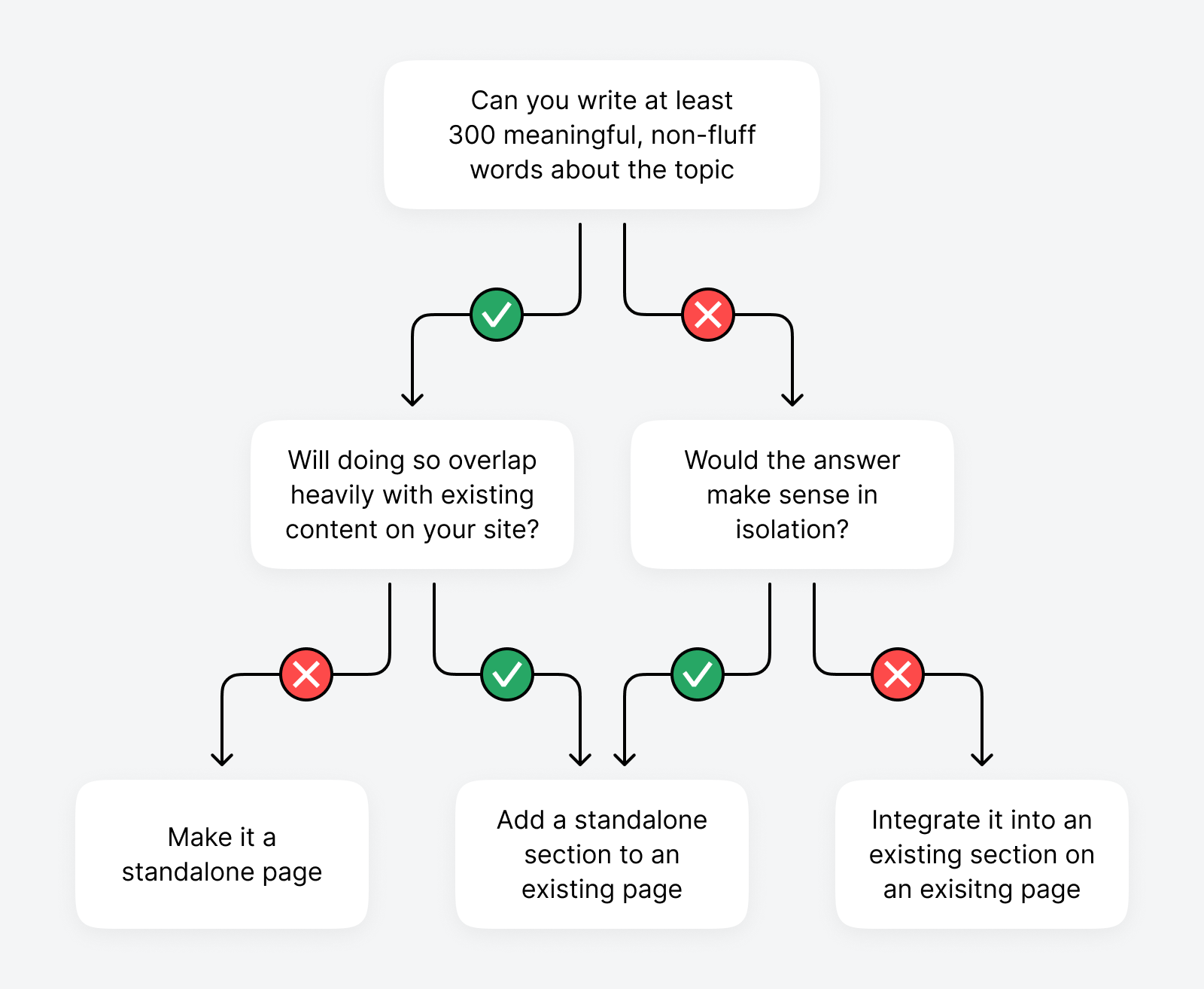

Each topic or cluster represents a potential content atom to add to your website. However, not every atom needs its own page to be answered well.

Some questions and topics are better answered within an existing article.

Whether you target a keyword with its own page or with a section of a page comes down to your website’s core topic. For example, if you have a specialist site about xeriscaping, it might make sense to target each question in a separate post where you can go deep into the details.

If you have a general gardening site, perhaps you could add each question as an FAQ to an existing post about xeriscaping instead.

This step ensures every piece of content you create starts with search intent alignment and is structured to function as a standalone unit where possible.
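The page-or-section rule can be written down as a tiny planning helper. This is a hypothetical sketch: `plan_questions`, `ContentPlan`, and the simple "does the site's core topic match the question cluster?" test are assumptions for illustration, and real editorial decisions involve far more judgment than a string comparison.

```python
from dataclasses import dataclass, field


@dataclass
class ContentPlan:
    new_pages: list[str] = field(default_factory=list)
    sections_in_existing_post: list[str] = field(default_factory=list)


def plan_questions(questions: list[str], site_core_topic: str, cluster_topic: str) -> ContentPlan:
    """Specialist site (core topic == cluster topic): one deep page per question.
    General site: fold the questions into an existing post as sections or FAQs."""
    if site_core_topic.strip().lower() == cluster_topic.strip().lower():
        return ContentPlan(new_pages=list(questions))
    return ContentPlan(sections_in_existing_post=list(questions))


questions = [
    "What is xeriscaping?",
    "What’s the goal of xeriscaping?",
    "How much does xeriscaping cost?",
    "What plants are good for xeriscaping?",
]
print(plan_questions(questions, "xeriscaping", "xeriscaping").new_pages)
print(plan_questions(questions, "gardening", "xeriscaping").sections_in_existing_post)
```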

Step 2: Apply BLUF (Bottom Line Up Front)

“Bottom Line Up Front” is a framework for communicating the most important information first, then proceeding with an explanation, example, or supporting details.

To implement it in your writing, start each article (and also each section within an article) with a direct answer or clear statement that fully addresses the core topic.
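If you want a quick automated nudge while editing, one rough heuristic is to check that each section's first sentence names the key terms from its heading and stays short. This is a hypothetical sketch, not an established BLUF metric: the stopword list, the 40-word limit, and the helper names are all assumptions.

```python
import re

# Assumed filler words to ignore when pulling key terms out of a heading.
STOPWORDS = {"what", "s", "is", "are", "the", "a", "an", "of", "how", "much",
             "does", "do", "for", "to", "good", "why", "when"}


def words(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def first_sentence(body: str) -> str:
    # Split once on sentence-ending punctuation followed by whitespace.
    return re.split(r"(?<=[.!?])\s+", body.strip(), maxsplit=1)[0]


def leads_with_answer(heading: str, body: str, max_words: int = 40) -> bool:
    """Heuristic BLUF check: the opening sentence mentions every key term
    from the heading and is short enough to read as a direct answer."""
    key_terms = {w for w in words(heading) if w not in STOPWORDS}
    opener = first_sentence(body)
    return key_terms <= set(words(opener)) and len(opener.split()) <= max_words


print(leads_with_answer("What is xeriscaping?",
                        "Xeriscaping is a landscaping approach that reduces the need for irrigation. "
                        "It suits dry climates."))  # True
print(leads_with_answer("What is xeriscaping?",
                        "Gardeners in dry regions face a lot of choices. "
                        "Xeriscaping is one of them."))  # False
```

A `False` doesn't mean the section is bad; it's just a prompt to check whether the answer is buried.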

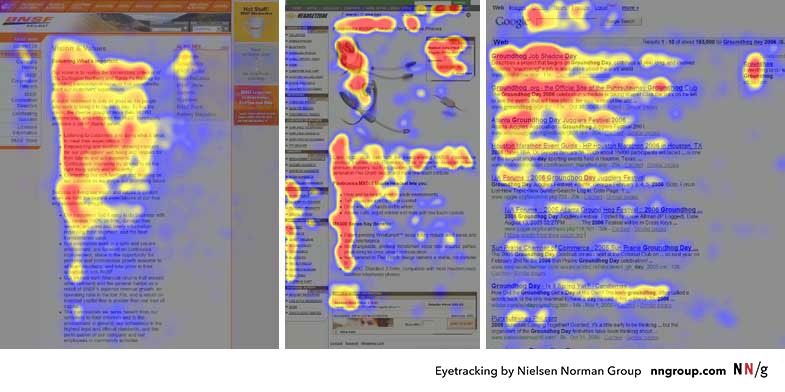

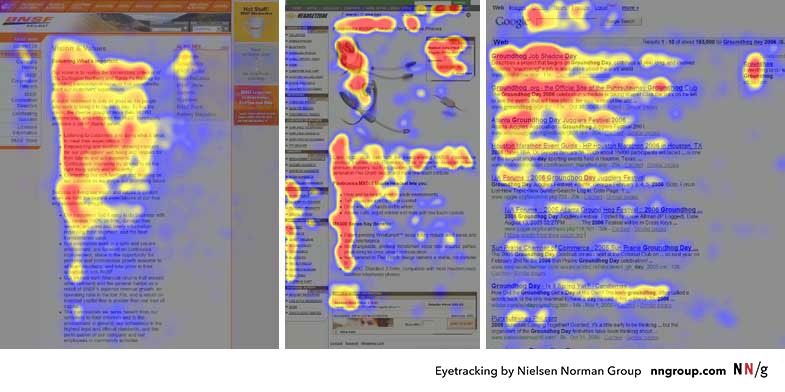

BLUF works because humans scan first, read later.

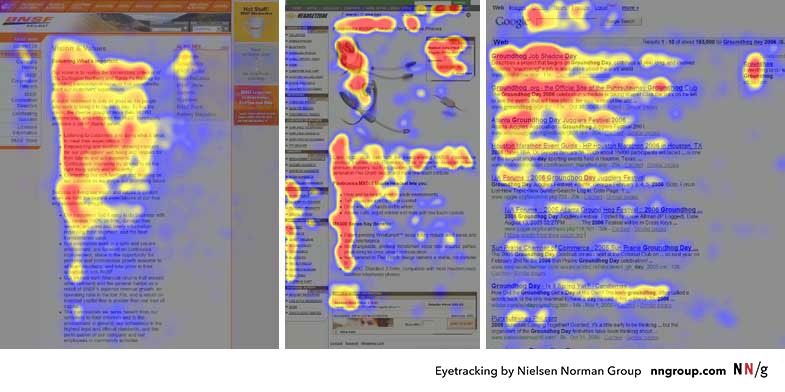

Decades of Nielsen Norman Group research show readers follow an F‑pattern when reading online: heavy focus on the beginning of sections, a sharp drop‑off in the middle, and some renewed attention at the end.

Large language models process text in a surprisingly similar way.

They tend to prioritize the beginning of a document or chunk, showing a U‑shaped attention bias:

- Weighing the early tokens most heavily

- Losing focus in the middle

- Regaining some emphasis toward the end of a sequence

For more insights into how this works, I recommend Dan Petrovic’s in-depth article “Human Friendly Content is AI Friendly Content.”

By putting the answer first, your content is instantly understandable, retrievable, and citation‑ready, whether for a human skimmer or an AI model embedding your page.
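One concrete reason answer-first can help retrieval: some embedding pipelines cap input length and drop anything past the limit. The toy sketch below models that with bag-of-words cosine similarity over only the first `max_words` words. Real embedding models are neural and far more nuanced; the scoring function, the word limit, and the sample text are assumptions for illustration.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def truncated_score(query: str, section: str, max_words: int) -> float:
    """Toy retrieval score: only the first `max_words` words of the section are
    'embedded', mimicking a pipeline that cuts off over-length input."""
    kept = " ".join(section.split()[:max_words])
    return cosine(bag_of_words(query), bag_of_words(kept))


answer = "Xeriscaping cost depends on yard size and plant choices."
preamble = "Many homeowners start a garden project with lots of questions about plants, soil, and water."
query = "How much does xeriscaping cost?"

bluf = truncated_score(query, f"{answer} {preamble}", max_words=10)
buried = truncated_score(query, f"{preamble} {answer}", max_words=10)
print(f"answer first: {bluf:.2f}, answer last: {buried:.2f}")  # prints: answer first: 0.28, answer last: 0.00
```

With the answer buried past the cutoff, the toy scorer never sees it at all.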

Step 3: Audit for self‑containment

Before publishing, it’s worth scanning your page and asking:

- Is related information grouped logically in your content structure?

- Is each section clear and easy to follow without extra explanation?

- Would a human dropping into this section from a jump link immediately get value?

- Does the section fully answer the intent of its keyword or topic cluster without relying on the rest of the page?
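Part of that checklist can be roughed out in code. The hypothetical sketch below flags sections whose opening words lean on earlier context ("It", "This", "As mentioned"), a common reason a section fails the jump-link test. The list of back-reference openers is an assumption and will produce false positives, so treat each flag as a prompt for a human read.

```python
# Assumed openers that usually point back to content outside the section.
BACK_REFERENCE_OPENERS = (
    "this ", "these ", "that ", "it ", "they ",
    "as mentioned", "as noted", "as we saw", "see above",
)


def needs_outside_context(body: str) -> bool:
    """True if the section's first words likely depend on earlier text."""
    return body.strip().lower().startswith(BACK_REFERENCE_OPENERS)


def audit_sections(sections: dict[str, str]) -> list[str]:
    """Return the headings of sections that may not stand on their own."""
    return [heading for heading, body in sections.items() if needs_outside_context(body)]


page = {
    "What is xeriscaping?": "Xeriscaping is landscaping designed to need little supplemental water.",
    "How much does xeriscaping cost?": "It depends on the factors we covered above.",
    "What plants are good for xeriscaping?": "As mentioned, choose plants suited to your climate.",
}
print(audit_sections(page))
# prints ['How much does xeriscaping cost?', 'What plants are good for xeriscaping?']
```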

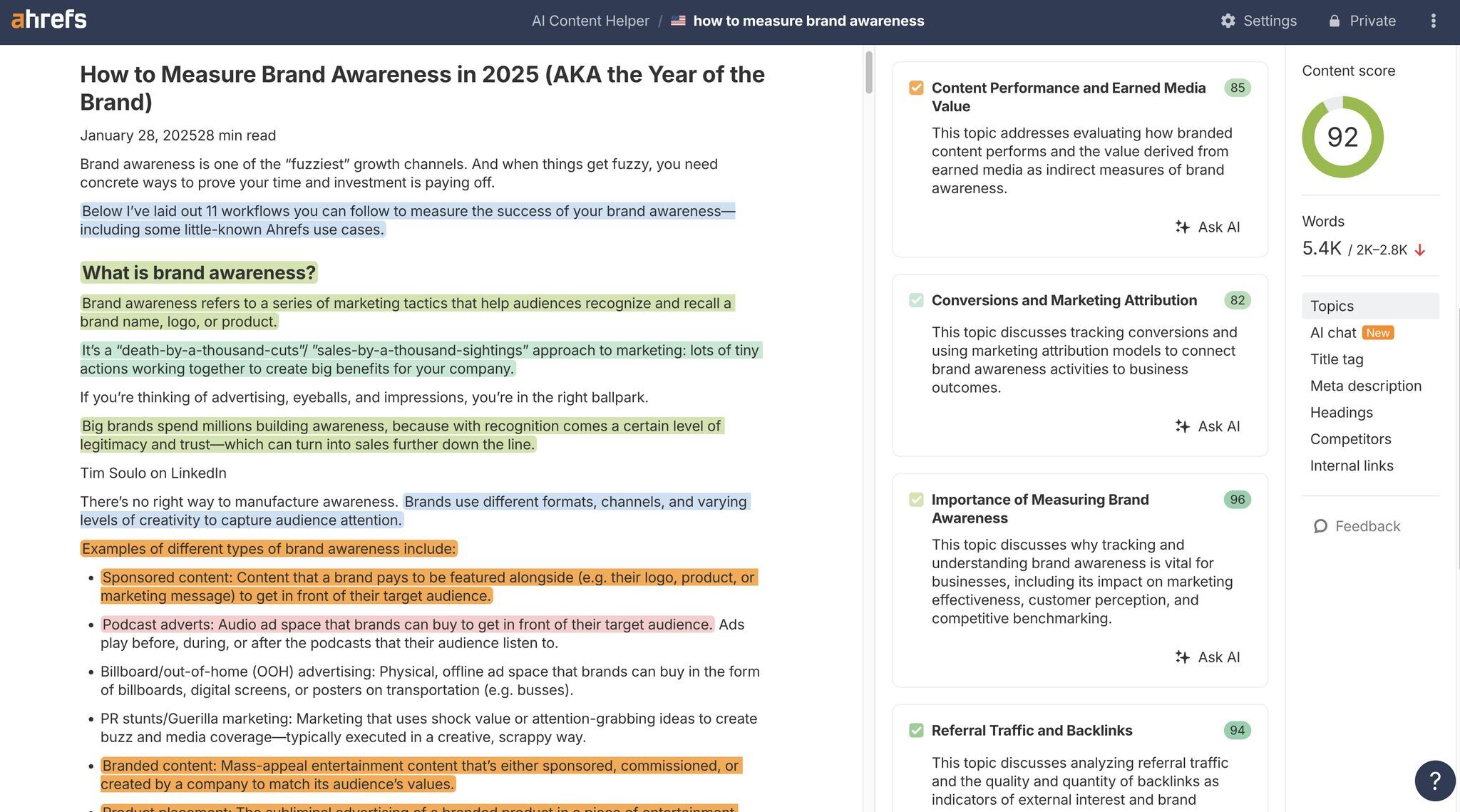

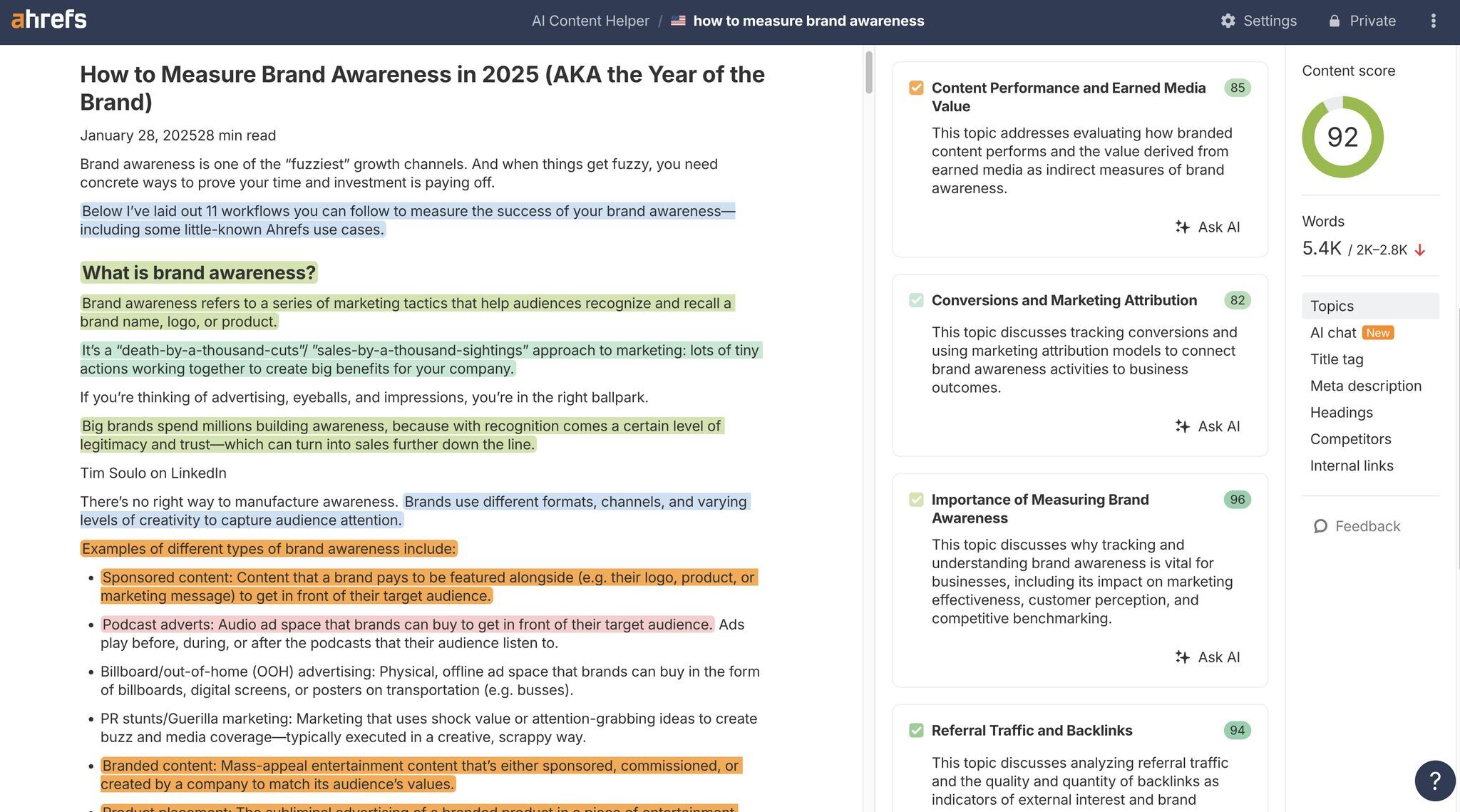

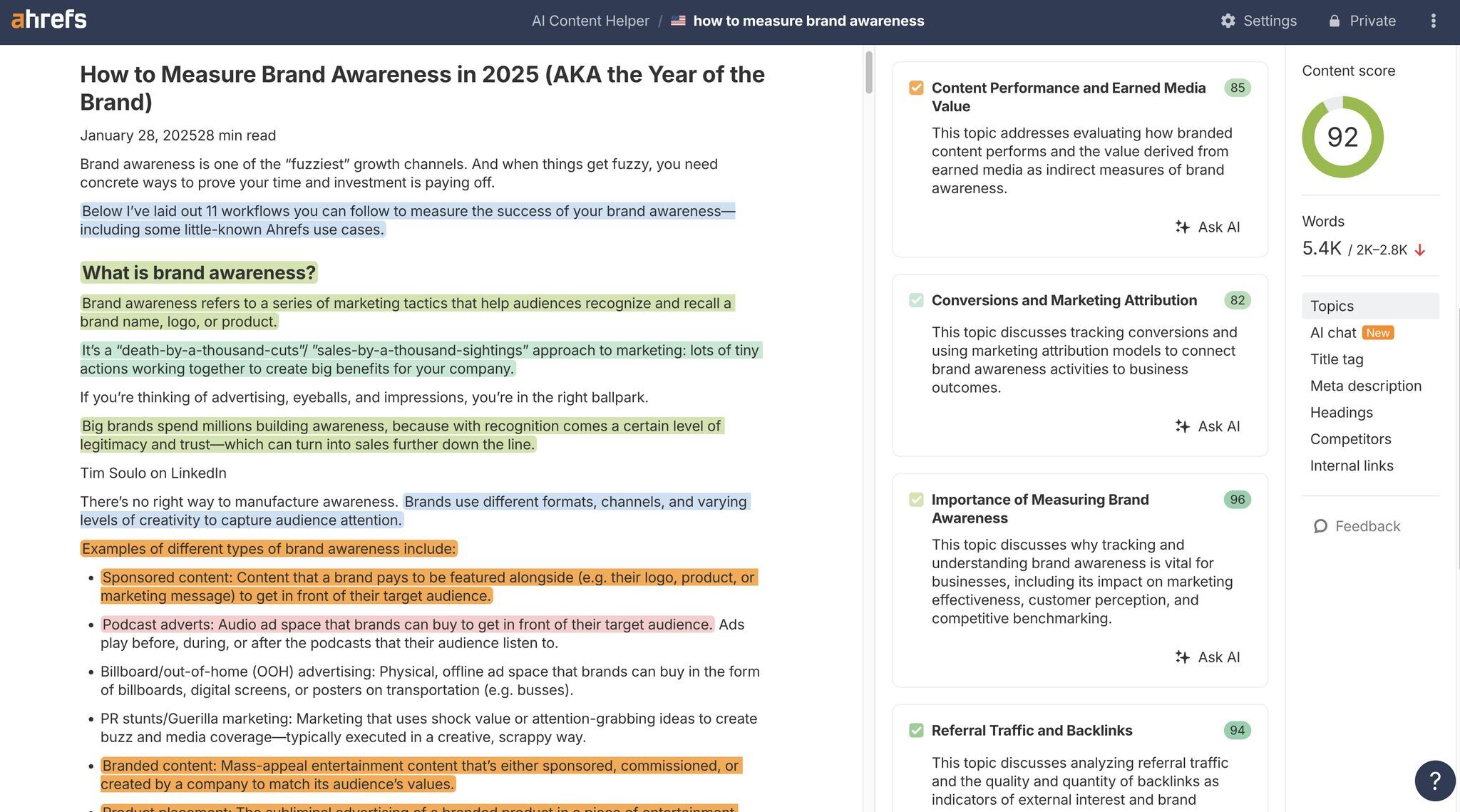

To make this audit faster and more objective, tools like Ahrefs’ AI Content Helper can help visualize your topic coverage.

Its topic‑coloring feature highlights distinct topics across your page, making it easy to spot:

- Overlapping or fragmented sections that should be merged

- Sections that don’t cover a topic completely

- Areas that may require re‑labeling or stronger context

If each section is self‑contained and your topic colors show clear, cohesive blocks, you’ve created atomic content that is naturally optimized for human attention and AI retrieval.
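As a rough, tool-agnostic stand-in for spotting overlapping sections, you can compare each pair of sections by how many distinctive words they share (Jaccard similarity). This is a hypothetical sketch, not how Ahrefs’ AI Content Helper works; the stopword list and the 0.5 threshold are arbitrary assumptions.

```python
import re
from itertools import combinations

# Assumed common words to ignore so they don't inflate overlap scores.
STOPWORDS = {"and", "the", "for", "with", "that", "are", "you", "your"}


def terms(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 2 and w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def overlapping_sections(sections: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Return (heading, heading, score) for section pairs whose vocabularies overlap heavily."""
    vocab = {heading: terms(body) for heading, body in sections.items()}
    pairs = []
    for h1, h2 in combinations(vocab, 2):
        score = jaccard(vocab[h1], vocab[h2])
        if score >= threshold:
            pairs.append((h1, h2, round(score, 2)))
    return pairs


sections = {
    "Why xeriscaping saves water": "Drought tolerant plants and mulch save water.",
    "Benefits of xeriscaping": "Drought tolerant plants and mulch save water and money.",
    "How much does xeriscaping cost?": "Installation cost depends on yard size.",
}
print(overlapping_sections(sections))
# prints [('Why xeriscaping saves water', 'Benefits of xeriscaping', 0.86)]
```

A flagged pair is a candidate for merging or for sharper labels, not an automatic fix.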

Closing takeaway

Chasing “chunk optimization” is a distraction.

AI systems will always split, embed, and retrieve your content in ways you can’t predict or control, and those methods will evolve as engineers optimize for speed and cost.

The winning approach isn’t trying to game the pipeline. It’s creating clear, self‑contained sections that deliver complete answers, so your content is valuable whether it’s read top‑to‑bottom by a human or pulled into an isolated AI summary.
